ARCH: A Framework for Accountability in the Age of AI in Healthcare

Introducing a new classification system to track, understand, and respond to AI-related complications with clinical rigor and transparency.

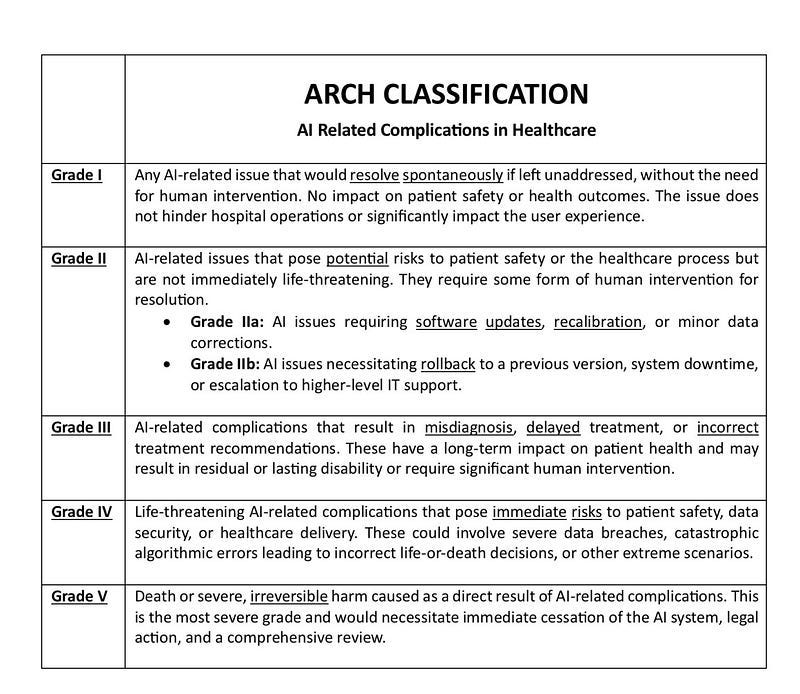

I’d like to introduce a new tool designed to help us better navigate one of the most urgent challenges in modern healthcare: how we understand, track, and respond to risks associated with artificial intelligence. It’s called the ARCH Classification System, a framework built to integrate AI-related risks and complications directly into the quality and safety infrastructure that healthcare systems already rely on.

ARCH was developed out of necessity. As AI is adopted across clinical settings, from diagnostics to workflow automation, it brings a new set of challenges. Traditional risk classification systems, while effective in many contexts, weren’t designed to address the unique characteristics of AI systems. AI tools often function as “black boxes,” making it difficult to trace causality, understand failure modes, or respond with clarity when something goes wrong.

ARCH fills this gap. It’s a structured, shared language for classifying AI-related complications that arise in clinical practice. Modeled on successful surgical classification systems like Codman, ARCH offers a transparent, scalable approach to identifying and managing harm, ranging from minor disruptions to severe patient outcomes.

Here’s how the classification works:

• Grade I: Minor AI-related events that resolve without intervention.

• Grade II: Events that require human or technical intervention.

    • Grade IIa: Resolved through recalibration or software updates.

    • Grade IIb: Requires system rollback or higher-level IT escalation.

• Grade III: Significant impact on patient care requiring long-term clinical management.

• Grade IV: Life-threatening AI-related complications.

• Grade V: Events resulting in irreversible harm or death.
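One way to make the grading concrete is to encode it as a small decision procedure. The sketch below is a hypothetical Python illustration, not part of ARCH itself. The `AIIncident` record and its boolean fields are assumptions standing in for whatever incident-reporting data a health system actually collects. Checking criteria from most to least severe, so that an event meeting several criteria receives the highest grade, is also an assumed rule rather than one stated by ARCH.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class ArchGrade(Enum):
    """ARCH grades as listed above; the string values are illustrative labels."""
    I = "I"
    IIA = "IIa"
    IIB = "IIb"
    III = "III"
    IV = "IV"
    V = "V"


@dataclass
class AIIncident:
    """Hypothetical incident record; these field names are assumptions, not part of ARCH."""
    irreversible_harm_or_death: bool = False
    life_threatening: bool = False
    long_term_clinical_management: bool = False
    needed_rollback_or_it_escalation: bool = False
    needed_recalibration_or_update: bool = False


def classify(incident: AIIncident) -> ArchGrade:
    """Return the most severe ARCH grade whose criterion the incident meets."""
    # Assumed rule: check from most to least severe so the highest applicable grade wins.
    if incident.irreversible_harm_or_death:
        return ArchGrade.V
    if incident.life_threatening:
        return ArchGrade.IV
    if incident.long_term_clinical_management:
        return ArchGrade.III
    # Grade II splits by the kind of intervention that resolved the event.
    if incident.needed_rollback_or_it_escalation:
        return ArchGrade.IIB
    if incident.needed_recalibration_or_update:
        return ArchGrade.IIA
    # No intervention was required: the event resolved on its own.
    return ArchGrade.I


if __name__ == "__main__":
    # Tally grades across a batch of hypothetical reports, as a quality team might when tracking trends.
    events = [
        AIIncident(),
        AIIncident(needed_recalibration_or_update=True),
        AIIncident(needed_rollback_or_it_escalation=True),
        AIIncident(life_threatening=True),
    ]
    print(Counter(classify(e).value for e in events))
```

The sketch models only the two Grade II routes the list names (recalibration or update, and rollback or escalation). A real deployment would need agreed definitions for terms like "long-term clinical management" before any such logic could be trusted.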

ARCH is not only for technologists. It’s built for clinical leaders, quality and safety officers, healthcare executives, and frontline providers—anyone invested in the safe and ethical integration of AI into clinical care. It’s also intended to serve as a common reference point for health systems, government agencies, and technology companies alike, providing a standard language to accompany emerging AI governance frameworks.

This is an open invitation.

We are seeking collaborators—across clinical, technical, and regulatory spaces—to help refine, implement, and strengthen this tool. If you work in healthcare innovation, if you’re part of a technology company designing AI solutions for health, or if you’re in policy or quality improvement, I encourage you to engage with ARCH.

Companies like Microsoft, Google Health, IBM iX, and others have a significant role to play in shaping how AI is deployed. But without a shared framework for risk classification, it’s difficult to move from principles to practice. ARCH is one attempt to bridge that gap.

No system is perfect, and that is precisely why we must build this one together. Your input can help make this tool smarter, safer, and more responsive to the realities of clinical care.

If we get this right, we don’t just mitigate harm. We build trust. We create the conditions for AI to improve care meaningfully, not hypothetically. And we demonstrate, through structure and not just intent, that patient safety remains at the heart of innovation.

Let’s shape that future together.

Dr. Salim Afshar