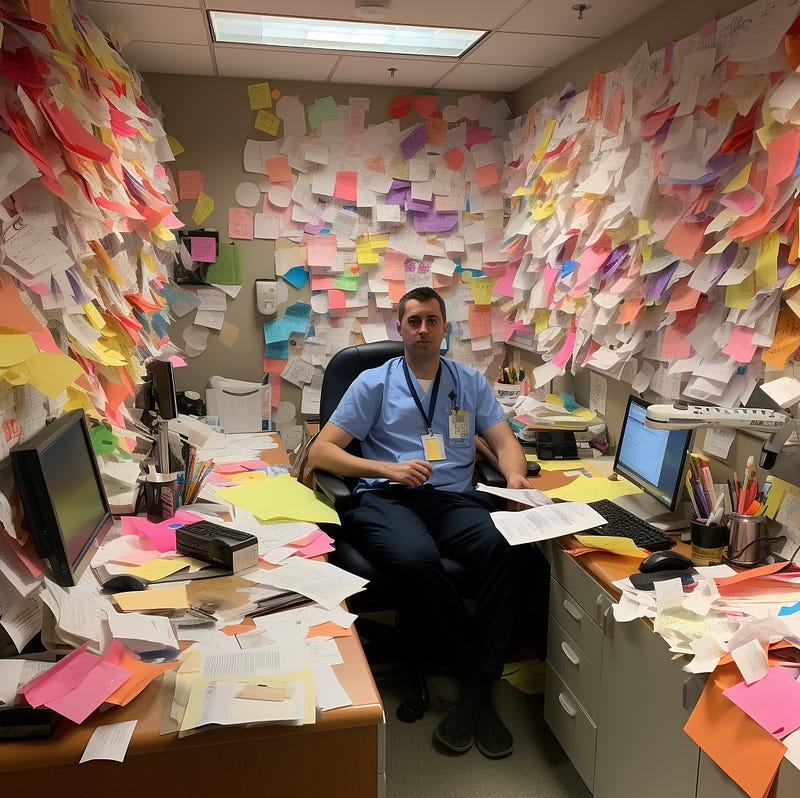

In the quiet spaces of hospitals—the corners of nurses’ stations, the side rooms filled with handwritten notes and clipped charts—you can see the weight of the system. It’s in the cluttered whiteboards, the reminders hastily taped to monitors, the exhausted faces of staff running from one task to the next. These are the everyday markers of a system stretched too thin, one where committed professionals are doing their best despite deep structural inefficiencies.

And yet, amid this daily reality, we continue to argue about whether we’re ready for artificial intelligence in healthcare. The debate is necessary. Concerns about safety, accountability, and unintended consequences must be addressed. But there’s something else we need to keep in view just as urgently: our current system is already failing.

Every day, patients are harmed not by technology, but by delay, miscommunication, fragmented care, and overburdened providers. The system is complex, but the root problem isn’t ambiguity—it’s inertia. And in that space, AI doesn’t represent a distant or abstract threat. It represents a tool—one of many—that could begin to ease what has become unsustainable.

AI has the potential to do more than improve efficiency. It can help flag diagnostic errors, predict deterioration, personalize treatment planning, and support clinicians in making more informed decisions. But none of that happens in isolation. It has to be implemented with intention, with oversight, and most importantly, with people who understand both the promise and the limits of the technology.

That’s why I appreciated the conversations at this year’s HLTH Future & Health Summit, where I had the opportunity to join a panel on governing and managing generative AI in healthcare. The discussions weren’t about hype; they were grounded in the real tensions—how to build trust, how to govern wisely, how to create structures that allow for innovation without undermining safety.

These are the kinds of questions VALID.ai was created to address. Announced at HLTH, VALID.ai is a new member-led initiative bringing together health systems, payers, nonprofits, and technology leaders to build shared frameworks for using generative AI responsibly. It’s not a platform for rhetoric—it’s a working space for collective learning and open innovation.

As Ashish Atreja from UC Davis Health put it, the opportunity before us is not just to adopt technology, but to co-create the science of generative AI alongside diverse partners. That’s an invitation not only to technical collaboration, but to a deeper kind of engagement—one that listens to frontline workers, includes patients, and builds public trust from the ground up.

The real risk isn’t that we move too quickly. The risk is that we continue to endure the unacceptable conditions we already know too well. That we normalize preventable harm, delay, and frustration. That we protect the status quo under the guise of caution. At some point, we have to ask—what does responsible innovation really mean? To me, it means not deferring action indefinitely. It means stepping forward with humility, with transparency, and with an unshakeable commitment to those we serve.

The challenge before us is not to prove that AI is flawless. It’s to show that we are willing to lead—not just with technology, but with principle. Not just with regulation, but with courage.

That’s what this moment calls for. Not perfection. But progress, shared openly, shaped together. And guided always by our highest duty—to care.

Salim Afshar